Difference between revisions of "Template:FriendlyCoreAllwinner-DVPCam"


{{#switch: {{{1}}}
 | NanoPi-M1|NanoPi-M1-Plus|NanoPi-NEO-Air =
For {{{1}}}, the CAM500B works with both the Linux-3.4 and Linux-4.14 kernels.<br>
The CAM500B camera module is a 5-megapixel camera with a DVP interface. For more technical details, refer to [[Matrix - CAM500B]].<br>
 | NanoPi-K1-Plus =
For {{{1}}}, the CAM500B works with the Linux-4.14 kernel.<br>
The CAM500B camera module is a 5-megapixel camera with a DVP interface. For more technical details, refer to [[Matrix - CAM500B]].<br>
 | NanoPi-Duo2 =
For {{{1}}}, the OV5640 works with the Linux-4.14 kernel.<br>
The NanoPi-Duo2 supports OV5640 cameras, and you can connect an OV5640 camera directly to the board. Here is the hardware setup:<br>
[[File:duo2-ov5640.png|frameless|500px|duo2-ov5640]] <br>
}}

| + | |||

| + | connect your board to camera module. Then boot OS, connect your board to a network, log into the board as root and run "mjpg-streamer": | ||

<syntaxhighlight lang="bash"> | <syntaxhighlight lang="bash"> | ||

| − | $ cd /root/mjpg-streamer | + | $ cd /root/C/mjpg-streamer |

$ make | $ make | ||

$ ./start.sh | $ ./start.sh | ||

</syntaxhighlight> | </syntaxhighlight> | ||

| − | + | You need to change the start.sh script and make sure it uses a correct /dev/videoX node. You can check your camera's node by running the following commands: | |

<syntaxhighlight lang="bash"> | <syntaxhighlight lang="bash"> | ||

$ apt-get install v4l-utils | $ apt-get install v4l-utils | ||

| Line 14: | Line 26: | ||

Card type : sun6i-csi | Card type : sun6i-csi | ||

Bus info : platform:camera | Bus info : platform:camera | ||

| − | Driver version: 4. | + | Driver version: 4.14.0 |

... | ... | ||

</syntaxhighlight> | </syntaxhighlight> | ||

| − | + | The above messages indicate that "/dev/video0" is camera's device node.The mjpg-streamer application is an open source video steam server. After it is successfully started the following messages will be popped up: | |

<syntaxhighlight lang="bash"> | <syntaxhighlight lang="bash"> | ||

$ ./start.sh | $ ./start.sh | ||

| Line 31: | Line 43: | ||

</syntaxhighlight> | </syntaxhighlight> | ||

| + | start.sh runs the following two commands: | ||

| + | <syntaxhighlight lang="bash"> | ||

| + | export LD_LIBRARY_PATH="$(pwd)" | ||

| + | ./mjpg_streamer -i "./input_uvc.so -d /dev/video0 -y 1 -r 1280x720 -f 30 -q 90 -n -fb 0" -o "./output_http.so -w ./www" | ||

| + | </syntaxhighlight> | ||

| + | Here are some details for mjpg_streamer's major options:<br> | ||

| + | -i: input device. For example "input_uvc.so" means it takes input from a camera;<br> | ||

| + | -o: output device. For example "output_http.so" means the it transmits data via http;<br> | ||

| + | -d: input device's subparameter. It defines a camera's device node;<br> | ||

| + | -y: input device's subparameter. It defines a camera's data format: 1:yuyv, 2:yvyu, 3:uyvy 4:vyuy. If this option isn't defined MJPEG will be set as the data format;<br> | ||

| + | -r: input device's subparameter. It defines a camera's resolution;<br> | ||

| + | -f: input device's subparameter. It defines a camera's fps. But whether this fps is supported depends on its driver;<br> | ||

| + | -q: input device's subparameter. It defines the quality of an image generated by libjpeg soft-encoding;<br> | ||

| + | -n: input device's subparameter. It disables the dynctrls function;<br> | ||

| + | -fb: input device's subparameter. It specifies whether an input image is displayed at "/dev/fbX";<br> | ||

| + | -w: output device's subparameter. It defines a directory to hold web pages;<br><br> | ||

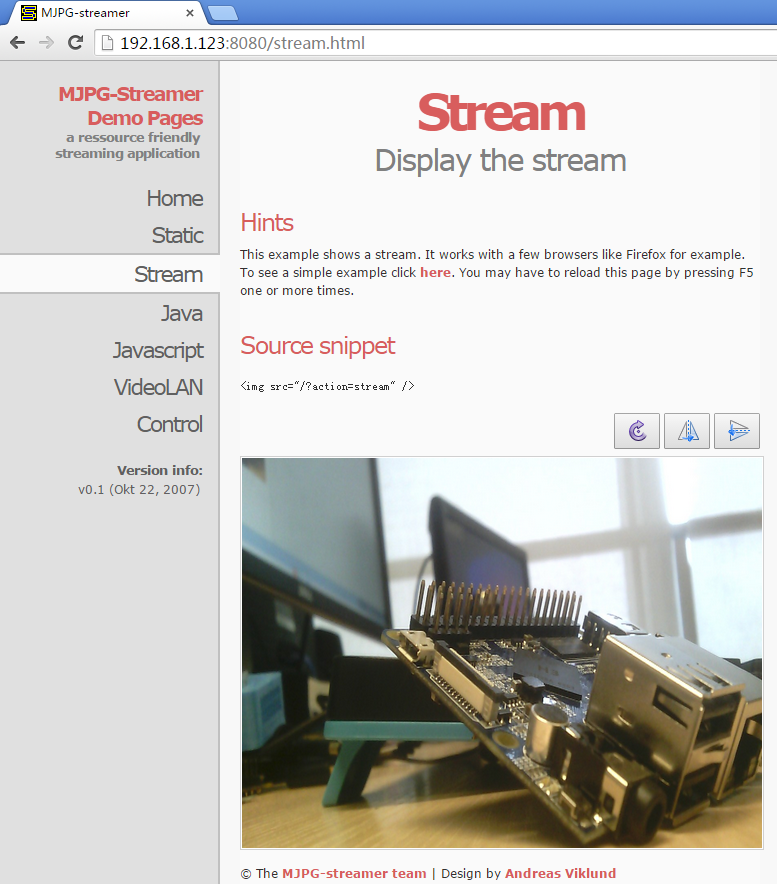

In our case the board's IP address was 192.168.1.230. We typed 192.168.1.230:8080 in a browser and were able to view the images taken from the camera's. Here is what you would expect to observe:<br> | In our case the board's IP address was 192.168.1.230. We typed 192.168.1.230:8080 in a browser and were able to view the images taken from the camera's. Here is what you would expect to observe:<br> | ||

[[File:mjpg-streamer-cam500a.png|frameless|400px|mjpg-streamer-cam500a]] <br> | [[File:mjpg-streamer-cam500a.png|frameless|400px|mjpg-streamer-cam500a]] <br> | ||

| − | mjpg- | + | The mjpg-streamer utility uses libjpeg to software-encode steam data. The Linux-4.14 based ROM currently doesn't support hardware-encoding. If you use a H3 boards with Linux-3.4 based ROM you can use the ffmpeg utility to hardware-encode stream data and this can greatly release CPU's resources and speed up encoding: |

<syntaxhighlight lang="bash"> | <syntaxhighlight lang="bash"> | ||

$ ffmpeg -t 30 -f v4l2 -channel 0 -video_size 1280x720 -i /dev/video0 -pix_fmt nv12 -r 30 \ | $ ffmpeg -t 30 -f v4l2 -channel 0 -video_size 1280x720 -i /dev/video0 -pix_fmt nv12 -r 30 \ | ||

Latest revision as of 05:27, 14 January 2019

Connect your board to the camera module. Then boot the OS, connect the board to a network, log in to the board as root, and run "mjpg-streamer":

<syntaxhighlight lang="bash">
$ cd /root/C/mjpg-streamer
$ make
$ ./start.sh
</syntaxhighlight>

Edit the start.sh script so that it uses the correct /dev/videoX node. You can find your camera's node by running the following commands:

<syntaxhighlight lang="bash">
$ apt-get install v4l-utils
$ v4l2-ctl -d /dev/video0 -D
Driver Info (not using libv4l2):
        Driver name   : sun6i-video
        Card type     : sun6i-csi
        Bus info      : platform:camera
        Driver version: 4.14.0
        ...
</syntaxhighlight>

The output above indicates that "/dev/video0" is the camera's device node. The mjpg-streamer application is an open-source video stream server. After it starts successfully, it prints the following messages:

<syntaxhighlight lang="bash">
$ ./start.sh
 i: Using V4L2 device.: /dev/video0
 i: Desired Resolution: 1280 x 720
 i: Frames Per Second.: 30
 i: Format............: YUV
 i: JPEG Quality......: 90
 o: www-folder-path...: ./www/
 o: HTTP TCP port.....: 8080
 o: username:password.: disabled
 o: commands..........: enabled
</syntaxhighlight>
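If the board exposes more than one video node, the check with v4l2-ctl can be scripted instead of read by eye. The following is a minimal sketch, not part of the original start.sh: the function names `driver_name` and `find_camera_node` are hypothetical, and it assumes v4l2-ctl (from v4l-utils) is installed and that its output has the "Driver name" line shown above.

```bash
#!/usr/bin/env bash
# Print the "Driver name" field from `v4l2-ctl -D` output read on stdin.
# (Hypothetical helper; it relies on the "Driver name : <value>" line format.)
driver_name() {
    awk -F':' '/Driver name/ { gsub(/^[ \t]+|[ \t]+$/, "", $2); print $2; exit }'
}

# Print the first /dev/videoX node whose driver name equals $1.
# Returns non-zero and prints nothing when no node matches.
find_camera_node() {
    local want="$1" dev
    for dev in /dev/video*; do
        [ -e "$dev" ] || continue
        if [ "$(v4l2-ctl -d "$dev" -D 2>/dev/null | driver_name)" = "$want" ]; then
            echo "$dev"
            return 0
        fi
    done
    return 1
}
```

On a board reporting the driver shown above, `find_camera_node sun6i-video` would be expected to print the node to put into start.sh.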

start.sh runs the following two commands:

<syntaxhighlight lang="bash">
export LD_LIBRARY_PATH="$(pwd)"
./mjpg_streamer -i "./input_uvc.so -d /dev/video0 -y 1 -r 1280x720 -f 30 -q 90 -n -fb 0" -o "./output_http.so -w ./www"
</syntaxhighlight>

Here are some details for mjpg_streamer's major options:

-i: input device. For example "input_uvc.so" means it takes input from a camera;

-o: output device. For example "output_http.so" means it transmits data via HTTP;

-d: input device's subparameter. It defines a camera's device node;

-y: input device's subparameter. It defines a camera's data format: 1:yuyv, 2:yvyu, 3:uyvy, 4:vyuy. If this option isn't defined, MJPEG will be set as the data format;

-r: input device's subparameter. It defines a camera's resolution;

-f: input device's subparameter. It defines a camera's fps. But whether this fps is supported depends on its driver;

-q: input device's subparameter. It defines the quality of an image generated by libjpeg soft-encoding;

-n: input device's subparameter. It disables the dynctrls function;

-fb: input device's subparameter. It specifies whether an input image is displayed at "/dev/fbX";

-w: output device's subparameter. It defines a directory to hold web pages;
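The options above can be collected into variables so that the device node, resolution, frame rate, and quality are changed in one place. This is a sketch of a possible start.sh variant, not the script shipped with the ROM: the variable names (`VIDEO_DEV`, `RESOLUTION`, `FPS`, `QUALITY`) and the functions `input_args` and `start_streamer` are assumptions introduced for illustration. The option values are the ones from the command shown earlier.

```bash
#!/usr/bin/env bash
# Hypothetical variant of start.sh. Defaults match the original command;
# each value can be overridden through an environment variable.
VIDEO_DEV="${VIDEO_DEV:-/dev/video0}"
RESOLUTION="${RESOLUTION:-1280x720}"
FPS="${FPS:-30}"
QUALITY="${QUALITY:-90}"

# Build the argument string that is passed to mjpg_streamer's -i option.
input_args() {
    echo "./input_uvc.so -d $VIDEO_DEV -y 1 -r $RESOLUTION -f $FPS -q $QUALITY -n -fb 0"
}

# Start the server. Call this from the mjpg-streamer build directory,
# because the plugins are loaded relative to the current directory.
start_streamer() {
    export LD_LIBRARY_PATH="$(pwd)"
    ./mjpg_streamer -i "$(input_args)" -o "./output_http.so -w ./www"
}
```

With this layout, running the script with `VIDEO_DEV=/dev/video1` set in the environment would switch the device node without editing the command line itself.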

In our case the board's IP address was 192.168.1.230. We typed 192.168.1.230:8080 in a browser and were able to view the images taken from the camera. Here is what you would expect to observe:

[[File:mjpg-streamer-cam500a.png|frameless|400px|mjpg-streamer-cam500a]]
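The stream can also be checked from a command line without a browser. mjpg-streamer's output_http plugin serves a single JPEG frame at `/?action=snapshot`; the sketch below builds that URL and fetches one frame with curl. The function names `snapshot_url` and `fetch_snapshot` and the default file name are hypothetical, and curl is assumed to be installed on the machine running the check.

```bash
#!/usr/bin/env bash
# Build the snapshot URL for a board at host $1, port $2 (default 8080).
snapshot_url() {
    echo "http://$1:${2:-8080}/?action=snapshot"
}

# Fetch one JPEG frame from host $1 into file $2 (default snapshot.jpg).
# Fails if the server is unreachable or does not answer within 5 seconds.
fetch_snapshot() {
    curl --silent --fail --max-time 5 -o "${2:-snapshot.jpg}" "$(snapshot_url "$1")"
}
```

For the board in this example, `fetch_snapshot 192.168.1.230` would be expected to write one frame to snapshot.jpg while start.sh is running.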

The mjpg-streamer utility uses libjpeg to software-encode stream data. The Linux-4.14 based ROM currently doesn't support hardware encoding. If you use an H3 board with a Linux-3.4 based ROM, you can use the ffmpeg utility to hardware-encode stream data, which frees up considerable CPU resources and speeds up encoding:

<syntaxhighlight lang="bash">
$ ffmpeg -t 30 -f v4l2 -channel 0 -video_size 1280x720 -i /dev/video0 -pix_fmt nv12 -r 30 \
        -b:v 64k -c:v cedrus264 test.mp4
</syntaxhighlight>

The -t 30 option limits the recording to 30 seconds. Typing "q" stops the recording earlier. When recording stops, a test.mp4 file is generated.
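Because the cedrus264 encoder is only present in ffmpeg builds that include it, it can help to check for it before recording. The sketch below filters the listing printed by `ffmpeg -encoders`; the function name `has_encoder` is hypothetical, and it assumes the listing keeps the usual layout in which the encoder name is the second column.

```bash
#!/usr/bin/env bash
# Succeed if the encoder named $1 appears as the second column
# of `ffmpeg -encoders` output read on stdin; fail otherwise.
has_encoder() {
    awk -v name="$1" '$2 == name { found = 1 } END { exit !found }'
}
```

A possible use on the board is `ffmpeg -encoders 2>/dev/null | has_encoder cedrus264 && echo "hardware encoder available"`.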